
Java Concurrency: Data, Resource Sharing and Thread Pools


Note: If you haven't read the first part of this series, check out Part 1: Java Concurrency Fundamentals.

What is Resource Sharing?

Resource sharing is a fundamental concept in concurrent programming where multiple threads access and potentially modify shared data or resources simultaneously.

Data and Resources Sharing

A resource can be any representation of data or objects, i.e. variables, flags, arrays, files, I/O devices, connections, and so on.

At the most basic level, data in Java lives in one of two main memory regions: the heap and the stack. (For more, see "Java Memory Model".)

| Heap | Stack |
|---|---|
| Data lives here and can be used by any part of the application. | Method frames are pushed and popped here. Short-lived and used by only one thread of execution (the main thread or another thread). |
| Objects, class members, static variables. | Local variables (primitive values and references). |

In a multithreaded environment where multiple tasks execute concurrently, working with shared resources and data isn't straightforward. Complex interactions between threads, if not handled properly, can cause anomalies such as race conditions, data races, and deadlocks:

- Unpredictable thread interactions

- Data inconsistency

- Race conditions

- Deadlock

- Performance bottlenecks

Race conditions, Data race and Deadlock

Race conditions:

A race condition occurs when two or more threads access shared data and try to change it at the same time, so the result depends on the order in which the threads happen to run. In short, the threads are "racing" to access or change the data.

Race Condition Demonstration

In this example, we'll illustrate a classic race condition scenario where multiple threads attempt to increment a shared counter:

import java.util.ArrayList;

import java.util.List;

public class A3_RaceConditions {

public static void main(String[] args) throws InterruptedException {

List<Thread> threads = new ArrayList<>();

// Creating 10 "IncrementerTask" threads and starting them

IncrementalData incrementalData = new IncrementalData();

for (int i = 0; i < 10; i++) {

Thread thread = new Thread(new IncrementerTask(incrementalData));

thread.start();

threads.add(thread);

}

// Wait until all threads are finished

for (Thread thread : threads) {

thread.join();

}

// Let's check the final result

System.out.println("Result value: " + incrementalData.getValue());

}

public static class IncrementerTask implements Runnable {

private IncrementalData incrementalData;

public IncrementerTask(IncrementalData incrementalData) {

this.incrementalData = incrementalData;

}

@Override

public void run() {

for (int i = 0; i < 10000; i++) {

incrementalData.increment();

}

}

}

public static class IncrementalData {

private int value = 0;

// The critical section

public void increment() {

// Read current value and add 1 to it

this.value = value + 1;

}

public int getValue() {

return value;

}

}

}

Key Characteristics of the Race Condition:

- Multiple threads attempt to modify a shared resource

- The increment() method is not thread-safe

- Each thread reads and writes the value independently

Code Analysis and Race Condition Explanation -- Unexpected Behavior:

- Each time we run this code, we may get a different result

- Expected result: 10 threads × 10,000 increments = 100,000

- Actual result: Typically less than 100,000

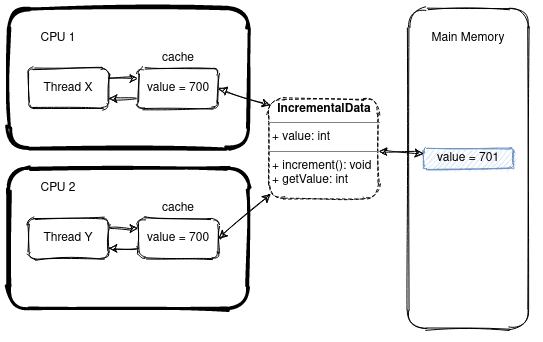

How the Race Condition Occurs:

- Thread-X reads the current value (e.g., 700)

- Thread-Y simultaneously reads the same value (700)

- Thread-X increments and sets value to 701

- Thread-Y also increments, setting value to 701

- The final result is incorrect (should be 702)

The Critical Section Problem:

- The increment() method is a critical section

- It reads the current value and adds 1

- No synchronization mechanism prevents concurrent access

NOTE

According to the Java Memory Model (JMM), an execution contains a data race if it contains at least two conflicting accesses to the same variable (reads or writes, at least one of which is a write) that are not ordered by a happens-before relationship.

Why This Happens:

- Threads interleave their operations unpredictably

- No mechanism ensures atomic increment operation

- The read-modify-write sequence is not atomic

Implications:

- Inconsistent and unpredictable results

- Data integrity is compromised

- Demonstrates the need for proper synchronization

A data race happens when two threads access the same memory location concurrently, at least one of the accesses is a write, and nothing synchronizes them. Race conditions and data races overlap considerably: many race conditions are caused by data races, and many data races lead to race conditions.

Preventing Race Conditions

To prevent race conditions, we must first identify the critical section in the code and then protect it using thread synchronization. Thread synchronization makes other threads wait until the thread currently inside the critical section leaves it.

In Java, thread synchronization can be done using synchronized blocks or methods, explicit locks, or atomic variables.
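As a minimal sketch of the atomic-variable option, the shared counter from the race-condition demo can be replaced by an AtomicInteger. The class and method names here (AtomicCounterDemo, countWithAtomic) are illustrative and not part of the original example.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.atomic.AtomicInteger;

class AtomicCounterDemo {

    // Runs threadCount threads that each increment one shared counter perThread times
    static int countWithAtomic(int threadCount, int perThread) {
        AtomicInteger value = new AtomicInteger(0);
        List<Thread> threads = new ArrayList<>();
        for (int i = 0; i < threadCount; i++) {
            Thread thread = new Thread(() -> {
                for (int j = 0; j < perThread; j++) {
                    // Atomic read-modify-write: no update can be lost
                    value.incrementAndGet();
                }
            });
            thread.start();
            threads.add(thread);
        }
        // Wait until all threads are finished
        for (Thread thread : threads) {
            try {
                thread.join();
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
                throw new IllegalStateException("Interrupted while joining", e);
            }
        }
        return value.get();
    }

    public static void main(String[] args) {
        // prints "Result value: 100000" on every run
        System.out.println("Result value: " + countWithAtomic(10, 10_000));
    }
}
```

Atomic variables suit a single counter or flag. When several fields must change together, a synchronized block or an explicit lock is usually the better fit.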

Using Synchronized on Object

A synchronized block on this, or a synchronized instance method, uses the object's lock.

// 1. synchronized block on this

synchronized(this) {

this.value = value + 1;

}

// 2. synchronized method:

public synchronized void increment() {

this.value = value + 1;

}

// Forms 1 and 2 above are practically equivalent.

// Even though the bytecode differs, both acquire the lock on this

Using Synchronized on Class

A synchronized block on the class object, or a static synchronized method, uses the lock of the Class object.

class MyClass {

public static void bar() {

synchronized(MyClass.class) {

doSomeOtherStuff();

}

}

}

// A static synchronized method has the same effect as the block above

class MyClass {

public static synchronized void bar() {

doSomeOtherStuff();

}

}

// The two forms above are practically equivalent.

// Even though the bytecode differs, both acquire the lock on MyClass.class

A synchronized lock is an intrinsic lock and is reentrant.

Implicit vs Explicit Locks

Every lock acquired through the synchronized keyword (also called a synchronized lock, monitor lock, or intrinsic lock) is an implicit lock, whereas explicit locks are those specified by the Lock interface.

IMPORTANT

Implicit locks are reentrant. Reentrant means that a thread holding the lock can enter another synchronized block on the same object (i.e. from inside a synchronized method it can call another synchronized method on the same object).

This means that if a thread attempts to acquire a lock it already owns, it will not block and will acquire it successfully. For instance, the following code does not block when bar() is called from inside foo(), i.e. foo() { bar() }:

public void bar(){

synchronized(this){ // thread already owns the lock and it is reentrant

...

}

}

//

public void foo(){

synchronized(this){

bar();

}

}

Explicit locks offer more control and are therefore more expressive. Commonly used explicit locks in Java include ReentrantLock and ReentrantReadWriteLock (the standard implementation of ReadWriteLock).

Using different locks

import java.util.ArrayList;

import java.util.List;

public class A3_SynchronizationLocks {

public static void main(String[] args) throws InterruptedException {

List<Thread> threads = new ArrayList<>();

// One GenericSavingFund instance per client: Bank-A (arcSavingFund) and Bank-B (flSavingFund)

GenericSavingFund arcSavingFund = new GenericSavingFund();

GenericSavingFund flSavingFund = new GenericSavingFund();

for (int i = 0; i < 10; i++) {

Thread thread = new Thread(new FundingClients(arcSavingFund));

thread.setName("Thread ArcSavingFund");

thread.start();

Thread thread2 = new Thread(new FundingClients(flSavingFund));

thread2.setName("Thread FLSavingFund");

thread2.start();

// Track both threads so that main waits for all of them before printing

threads.add(thread);

threads.add(thread2);

}

// Wait until all threads are finished

for (Thread thread : threads) {

thread.join();

}

// Let's check the final result

arcSavingFund.getDetails();

System.out.println("------------");

flSavingFund.getDetails();

}

public static class FundingClients implements Runnable {

private GenericSavingFund genericSavingFund;

public FundingClients(GenericSavingFund genericSavingFund) {

this.genericSavingFund = genericSavingFund;

}

@Override

public void run() {

for (int i = 0; i < 10000; i++) {

genericSavingFund.increaseSavingBalance();

double random = Math.random();

if( random > 0.95){

genericSavingFund.increaseMaturity();

}

}

}

}

public static class GenericSavingFund {

private int savingBalance = 0;

private int maturityYears = 1;

private static final int POLICY_MAX_MATURITY_YEARS = 25;

private Object lock1 = new Object();

private Object lock2 = new Object();

public void increaseSavingBalance() {

synchronized (lock1) {

System.out.println(Thread.currentThread().getName() + ": Increasing current balance from " + savingBalance);

savingBalance = savingBalance + 5;

}

}

public void increaseMaturity() {

synchronized (lock2) {

System.out.println(Thread.currentThread().getName() + ": Increasing current maturity from " + maturityYears);

if (maturityYears < POLICY_MAX_MATURITY_YEARS) {

maturityYears++;

}

}

}

public void getDetails() {

System.out.println("Total Max maturity Year: " + POLICY_MAX_MATURITY_YEARS);

System.out.println("You completed " + maturityYears + " maturity years");

long remainingYears = (long) POLICY_MAX_MATURITY_YEARS - maturityYears;

String response = remainingYears==0 ? "Your balance is ready: " + savingBalance : "Please wait some years: " + remainingYears;

System.out.println(response);

}

}

}

#output

Total Max maturity Year: 25

You completed 25 maturity years

Your balance is ready: 500000

Suppose GenericSavingFund makes its critical sections thread-safe using synchronization.

- Any number of clients can call increaseSavingBalance() to add 5 to the balance, or increaseMaturity() to add 1 year to the maturity.

Assume we have two clients, each with its own saving fund: Bank-A and Bank-B.

Each of them creates its own GenericSavingFund instance: "arcSavingFund" for Bank-A and "flSavingFund" for Bank-B.

Without the synchronized block, the following can happen in the middle of execution:

- Say balance = 5 and years = 2.

- One "arcSavingFund" thread reads balance 5, and another "arcSavingFund" thread reads balance 5 at the same moment.

- Both add 5 and write the result back, so the final balance is 10.

- Since two clients added money to the fund, the balance should have been 15.

The critical section here is increaseSavingBalance(), which reads and updates the value.

increaseSavingBalance() and increaseMaturity() are two independent tasks.

- If both were synchronized methods locking on the same object, two threads could not run them on that object at the same time,

- i.e. arcSavingFund.increaseSavingBalance() and arcSavingFund.increaseMaturity() would block each other.

Because these two methods are independent tasks:

- They operate on different resources: one on savingBalance, the other on maturityYears.

- For this reason the code uses two different locks, lock1 and lock2.

- With two locks, one thread can run arcSavingFund.increaseSavingBalance() while another runs arcSavingFund.increaseMaturity().

We use synchronized blocks and locks to protect our critical sections. While doing so, a thread sometimes has to wait for a lock held by another thread, while that thread in turn waits for a lock held by the first. When threads depend on each other in this way, the result is a deadlock.

Deadlock can happen when multiple threads need the same locks but acquire them in different orders. For example, if Thread A locks Resource 1 and then attempts to hold Resource 2, while Thread B locks Resource 2 and then attempts to hold Resource 1, neither thread can proceed, resulting in a deadlock.

NOTE

A deadlock in Java occurs when two or more threads are each waiting to enter a critical section protected by locks that the other threads hold. This situation prevents any of the involved threads from proceeding, causing the program to freeze.

Steps to Avoid or Recover from Deadlock:

- Consistent Lock Order: Acquire multiple locks in a fixed sequence to prevent circular waits.

- Lock Timeouts: Use timeouts when acquiring locks, allowing threads to back off and retry if locks aren't obtained promptly.

- Minimize Lock Scope: Keep critical sections short and lock only necessary resources to reduce lock holding time.

- Deadlock Detection and Recovery: Monitor for deadlocks and implement strategies like restarting threads or releasing locks to resolve them.
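The first item, consistent lock order, can be sketched as follows. This is an illustrative example rather than a general recipe: the Account class, its id field, and the transfer method are hypothetical names, and the sketch assumes every account has a unique id that defines the locking order.

```java
class LockOrderingDemo {

    static class Account {
        final int id;        // hypothetical unique id, used only to order the locks
        private int balance;

        Account(int id, int balance) {
            this.id = id;
            this.balance = balance;
        }

        synchronized int getBalance() {
            return balance;
        }
    }

    // Always locks the account with the smaller id first, whatever the transfer direction
    static void transfer(Account from, Account to, int amount) {
        Account first = from.id < to.id ? from : to;
        Account second = (first == from) ? to : from;
        synchronized (first) {
            synchronized (second) {
                from.balance -= amount;
                to.balance += amount;
            }
        }
    }

    // Two threads transfer in opposite directions; returns the final balances
    static int[] runOpposingTransfers() {
        Account a = new Account(1, 1_000);
        Account b = new Account(2, 1_000);
        Thread t1 = new Thread(() -> { for (int i = 0; i < 10_000; i++) transfer(a, b, 1); });
        Thread t2 = new Thread(() -> { for (int i = 0; i < 10_000; i++) transfer(b, a, 1); });
        t1.start();
        t2.start();
        try {
            t1.join();
            t2.join();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException("Interrupted while joining", e);
        }
        return new int[] { a.getBalance(), b.getBalance() };
    }

    public static void main(String[] args) {
        int[] balances = runOpposingTransfers();
        System.out.println("a = " + balances[0] + ", b = " + balances[1]);
    }
}
```

If transfer() locked from first and to second, the two threads could each hold one account and wait forever for the other. Ordering by id removes the circular wait.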

ReentrantLock:

ReentrantLock is a mutual exclusion lock with the same basic behavior as the implicit monitor lock, plus extended capabilities. Like implicit locks, it is reentrant.
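A small sketch of both properties, reentrancy and one extended capability (a timed tryLock). The ReentrantLockAccount class and its methods are made up for illustration; only ReentrantLock and its lock(), unlock(), tryLock() and getHoldCount() methods come from the JDK.

```java
import java.util.concurrent.TimeUnit;
import java.util.concurrent.locks.ReentrantLock;

class ReentrantLockAccount {
    private final ReentrantLock lock = new ReentrantLock();
    private int balance = 0;
    private int holdCountSeenInAudit = 0;

    void deposit(int amount) {
        lock.lock();
        try {
            balance += amount;
            audit(); // re-acquires the lock this thread already holds
        } finally {
            lock.unlock(); // always release in finally
        }
    }

    private void audit() {
        lock.lock(); // does not block: the lock is reentrant
        try {
            holdCountSeenInAudit = lock.getHoldCount();
        } finally {
            lock.unlock();
        }
    }

    // Extended capability: give up after a timeout instead of blocking forever
    boolean tryDeposit(int amount, long timeoutMillis) {
        try {
            if (!lock.tryLock(timeoutMillis, TimeUnit.MILLISECONDS)) {
                return false;
            }
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            return false;
        }
        try {
            balance += amount;
            return true;
        } finally {
            lock.unlock();
        }
    }

    int getBalance() {
        lock.lock();
        try {
            return balance;
        } finally {
            lock.unlock();
        }
    }

    int getHoldCountSeenInAudit() {
        lock.lock();
        try {
            return holdCountSeenInAudit;
        } finally {
            lock.unlock();
        }
    }
}
```

Unlike synchronized, an explicit lock is not released automatically, which is why every lock() above is paired with unlock() in a finally block.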

ReadWriteLock:

The ReadWriteLock interface maintains a pair of locks, one for read access and one for write access. The read lock can be held simultaneously by multiple threads as long as no thread holds the write lock. This can improve performance and throughput when reads are more frequent than writes.
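As a rough illustration, a read-mostly cache could guard a plain HashMap with a ReentrantReadWriteLock. The ReadMostlyCache class is a hypothetical example, not a recommendation over ConcurrentHashMap.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.concurrent.locks.ReadWriteLock;
import java.util.concurrent.locks.ReentrantReadWriteLock;

class ReadMostlyCache {
    private final Map<String, String> data = new HashMap<>();
    private final ReadWriteLock rwLock = new ReentrantReadWriteLock();

    // Many readers may hold the read lock at the same time
    String get(String key) {
        rwLock.readLock().lock();
        try {
            return data.get(key);
        } finally {
            rwLock.readLock().unlock();
        }
    }

    // The write lock is exclusive: it waits for readers and blocks new ones
    void put(String key, String value) {
        rwLock.writeLock().lock();
        try {
            data.put(key, value);
        } finally {
            rwLock.writeLock().unlock();
        }
    }
}
```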

[See: ReentrantLock fairness, tryLock(), ReaderWriter Problem, Dining Philosopher problem]

Semaphore

A semaphore restricts the number of concurrent accesses to a resource using a set of permits. Its core methods are acquire() and release(), which are useful in scenarios where we have to limit the amount of concurrent access.

// Semaphore semaphore = new Semaphore (No. of available permits);

Semaphore semaphore = new Semaphore (1); // Binary Semaphore

// acquiring the permit / lock

semaphore.acquire();

// releasing the permit / lock

semaphore.release();

Semaphore doesn't have a notion of owner thread, so it's not reentrant in nature.
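To see permits limiting concurrency, the sketch below lets several threads compete for a few permits and records how many were inside the guarded section at once. SemaphoreDemo and maxConcurrentObserved are illustrative names, and the 10 ms sleep merely stands in for real work.

```java
import java.util.concurrent.Semaphore;
import java.util.concurrent.atomic.AtomicInteger;

class SemaphoreDemo {

    // Starts threadCount threads and returns the highest number seen inside the guarded section
    static int maxConcurrentObserved(int permits, int threadCount) {
        Semaphore semaphore = new Semaphore(permits);
        AtomicInteger inside = new AtomicInteger();
        AtomicInteger maxSeen = new AtomicInteger();
        Thread[] threads = new Thread[threadCount];
        for (int i = 0; i < threadCount; i++) {
            threads[i] = new Thread(() -> {
                try {
                    semaphore.acquire(); // blocks while all permits are taken
                } catch (InterruptedException e) {
                    Thread.currentThread().interrupt();
                    return;
                }
                try {
                    int now = inside.incrementAndGet();
                    maxSeen.accumulateAndGet(now, Math::max);
                    Thread.sleep(10); // simulated work on the limited resource
                } catch (InterruptedException e) {
                    Thread.currentThread().interrupt();
                } finally {
                    inside.decrementAndGet();
                    semaphore.release(); // hand the permit back
                }
            });
            threads[i].start();
        }
        for (Thread thread : threads) {
            try {
                thread.join();
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
                throw new IllegalStateException("Interrupted while joining", e);
            }
        }
        return maxSeen.get();
    }

    public static void main(String[] args) {
        // Never prints a number above 3
        System.out.println("Max concurrent: " + maxConcurrentObserved(3, 10));
    }
}
```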

[See: Producer-Consumer Problem using wait()-notify() And also using Semaphore]

What's Next: Thread Pooling, Executors, and Scheduling in Java

Manually creating and managing threads gives you fine-grained control but can be cumbersome and inefficient for complex applications. Java's Executor framework helps streamline this by handling thread creation, reuse, and scheduling for you.

1. Thread Pooling

A thread pool reuses a fixed number of threads to execute tasks from a queue. This prevents overhead associated with continually creating and destroying threads. Thread pools also help you manage task backlogs gracefully.

NOTE

Key Benefits of Thread Pools

- Reduced overhead of thread creation/destruction

- Better throughput under heavy load

- Ability to limit concurrency (avoid excessive threads)

- Simplified error handling for tasks

2. Executor and ExecutorService

The Executor interface (and its sub-interface ExecutorService) allows you to submit tasks (Runnable or Callable) for asynchronous execution.

import java.util.concurrent.ExecutorService;

import java.util.concurrent.Executors;

public class ExecutorExample {

public static void main(String[] args) {

// Create a fixed thread pool of size 3

ExecutorService executorService = Executors.newFixedThreadPool(3);

// Submit tasks

for(int i = 1; i <= 5; i++){

final int taskNumber = i;

executorService.submit(() -> {

System.out.println("Executing Task " + taskNumber + " with Thread " + Thread.currentThread().getName());

});

}

// Shutdown the executor after submitting tasks

executorService.shutdown();

}

}

TIP

You can also use:

- newCachedThreadPool(): Expands threads as needed; suitable for many short-lived tasks.

- newSingleThreadExecutor(): Only one worker thread.

- newWorkStealingPool(): Based on the number of available processors (uses ForkJoinPool under the hood).

3. ScheduledExecutorService

To schedule tasks periodically or after a delay, Java provides the ScheduledExecutorService. This is useful for recurring background operations like cleanup tasks, sending periodic metrics, or timed notifications.

import java.util.concurrent.Executors;

import java.util.concurrent.ScheduledExecutorService;

import java.util.concurrent.TimeUnit;

public class ScheduledExecutorExample {

public static void main(String[] args) {

ScheduledExecutorService scheduler = Executors.newScheduledThreadPool(2);

// Schedule a one-time task (runs after 5 seconds)

scheduler.schedule(() -> {

System.out.println("Running after 5 seconds delay");

}, 5, TimeUnit.SECONDS);

// Schedule a recurring task (first run after 2 seconds, repeats every 3 seconds)

scheduler.scheduleAtFixedRate(() -> {

System.out.println("Repeating task: " + System.currentTimeMillis());

}, 2, 3, TimeUnit.SECONDS);

// Optionally, schedule a repeating task with a fixed delay between runs

// scheduler.scheduleWithFixedDelay(runnable, initialDelay, delay, TimeUnit.SECONDS);

// The scheduler keeps running; in a real app, you may decide when to shut it down

// scheduler.shutdown();

}

}

CAUTION

Shutdown Strategy

If you never call shutdown(), your application may not exit normally because the scheduled threads remain active. Use shutdown() or shutdownNow() when appropriate.
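One common way to combine the two calls is to ask for an orderly shutdown first and fall back to shutdownNow() only if tasks do not finish in time. The helper below is a sketch of that pattern. The GracefulShutdown class and the shutdownAndAwait name are assumptions for illustration, and the right timeout depends on the application.

```java
import java.util.concurrent.ExecutorService;
import java.util.concurrent.TimeUnit;

class GracefulShutdown {

    // Returns true if the pool terminated, either gracefully or after shutdownNow()
    static boolean shutdownAndAwait(ExecutorService pool, long timeoutSeconds) {
        pool.shutdown(); // stop accepting new tasks; queued tasks still run
        try {
            if (pool.awaitTermination(timeoutSeconds, TimeUnit.SECONDS)) {
                return true;
            }
            pool.shutdownNow(); // interrupt running tasks, discard queued ones
            return pool.awaitTermination(timeoutSeconds, TimeUnit.SECONDS);
        } catch (InterruptedException e) {
            pool.shutdownNow();
            Thread.currentThread().interrupt(); // preserve the interrupt status
            return false;
        }
    }
}
```

The same helper works for a ScheduledExecutorService, since it extends ExecutorService.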

4. Fork/Join Framework (Parallelism)

The Fork/Join framework (java.util.concurrent.ForkJoinPool) splits a large task into subtasks (fork) and merges the results (join). It optimizes usage of CPU cores, especially for tasks that can be broken down recursively.

import java.util.concurrent.RecursiveTask;

import java.util.concurrent.ForkJoinPool;

public class ForkJoinExample {

static class SumTask extends RecursiveTask<Long> {

private static final int THRESHOLD = 10_000;

private int[] arr;

private int start, end;

public SumTask(int[] arr, int start, int end) {

this.arr = arr;

this.start = start;

this.end = end;

}

@Override

protected Long compute() {

// If task is small enough, compute directly

if (end - start <= THRESHOLD) {

long sum = 0;

for (int i = start; i < end; i++) {

sum += arr[i];

}

return sum;

} else {

// Split task into halves

int mid = (start + end) / 2;

SumTask leftTask = new SumTask(arr, start, mid);

SumTask rightTask = new SumTask(arr, mid, end);

// Fork the left half to run asynchronously; the right half is computed in this thread

leftTask.fork();

long rightResult = rightTask.compute();

long leftResult = leftTask.join();

return leftResult + rightResult;

}

}

}

public static void main(String[] args) {

int size = 100_000_000;

int[] array = new int[size];

for (int i = 0; i < size; i++) {

array[i] = 1; // fill with ones

}

ForkJoinPool pool = new ForkJoinPool();

SumTask task = new SumTask(array, 0, array.length);

long sum = pool.invoke(task);

System.out.println("Sum = " + sum);

}

}

IMPORTANT

When to Use Fork/Join

- Large data sets that can be divided into independent subproblems.

- Recursive algorithms like merge sort, quick sort, or advanced parallel computations.

5. Beyond Thread Pools: Futures and CompletableFuture

While the ExecutorService handles asynchronous tasks, sometimes you need to compose or chain asynchronous results. Futures and CompletableFuture help with that.

Basic Future

A Future<T> represents a result that will eventually be available. You can submit a Callable<T> to an ExecutorService and get a Future<T> back. However, the basic Future interface only lets you check whether the task is done, cancel it, or block on get() until the result arrives; it cannot easily chain or combine results.
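A minimal sketch of that flow, submitting a Callable and blocking on the returned Future with a timeout. FutureDemo and computeInBackground are illustrative names, and the 6 * 7 computation is only a placeholder for real work.

```java
import java.util.concurrent.Callable;
import java.util.concurrent.ExecutionException;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.TimeoutException;

class FutureDemo {

    static int computeInBackground() {
        ExecutorService executor = Executors.newSingleThreadExecutor();
        try {
            Callable<Integer> task = () -> 6 * 7; // placeholder computation
            Future<Integer> future = executor.submit(task);
            // get() blocks the calling thread until the result is ready or the timeout expires
            return future.get(5, TimeUnit.SECONDS);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException("Interrupted while waiting", e);
        } catch (ExecutionException | TimeoutException e) {
            throw new IllegalStateException("Task failed or timed out", e);
        } finally {
            executor.shutdown();
        }
    }

    public static void main(String[] args) {
        System.out.println("Result: " + computeInBackground()); // prints "Result: 42"
    }
}
```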

CompletableFuture

CompletableFuture<T> implements Future<T> (and CompletionStage<T>) and provides methods to handle callbacks, chain transformations, and combine multiple asynchronous tasks.

import java.util.concurrent.CompletableFuture;

import java.util.concurrent.ExecutionException;

public class CompletableFutureExample {

public static void main(String[] args) throws ExecutionException, InterruptedException {

// Start an asynchronous computation

CompletableFuture<String> futureGreeting = CompletableFuture.supplyAsync(() -> {

try {

Thread.sleep(1000);

} catch (InterruptedException e) {

Thread.currentThread().interrupt(); // restore the interrupt flag instead of swallowing it

}

return "Hello from the future!";

});

// Chain a transformation

CompletableFuture<String> finalFuture = futureGreeting

.thenApplyAsync(greeting -> greeting + " (Appended text)");

// You can do other work here...

System.out.println("Main thread is free to do other tasks...");

// Block and get the result (for demo purposes)

String result = finalFuture.get();

System.out.println("Result: " + result);

}

}

TIP

CompletableFuture Methods

- supplyAsync(): Run a supplier asynchronously

- thenApplyAsync(): Transform the result

- thenCompose(), thenCombine(): Merge multiple async operations

- allOf(), anyOf(): Combine multiple CompletableFutures
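The difference between thenCombine() and thenCompose() is easiest to see side by side. In this sketch the values (a price, a tax, a user id) are made-up placeholders and CombineDemo is an illustrative class name.

```java
import java.util.concurrent.CompletableFuture;

class CombineDemo {

    // thenCombine: two independent async results merged once both are ready
    static int combinedPrice() {
        CompletableFuture<Integer> price = CompletableFuture.supplyAsync(() -> 100);
        CompletableFuture<Integer> tax = CompletableFuture.supplyAsync(() -> 20);
        return price.thenCombine(tax, Integer::sum).join();
    }

    // thenCompose: the second async step depends on the result of the first
    static String composedLookup() {
        return CompletableFuture.supplyAsync(() -> "user-42")
                .thenCompose(id -> CompletableFuture.supplyAsync(() -> id + ":profile"))
                .join();
    }

    public static void main(String[] args) {
        System.out.println(combinedPrice());  // prints 120
        System.out.println(composedLookup()); // prints user-42:profile
    }
}
```

join() is used here instead of get() only because it throws no checked exceptions. Both block until the result is available.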

6. Parallel Streams

Parallel streams (introduced in Java 8) allow data processing tasks to be automatically split and executed in parallel. Under the hood, parallel streams run on the common ForkJoinPool by default.

import java.util.stream.LongStream;

public class ParallelStreamsDemo {

public static void main(String[] args) {

long sum = LongStream.rangeClosed(1, 10_000_000)

.parallel()

.reduce(0, Long::sum);

System.out.println("Parallel sum = " + sum);

}

}

CAUTION

Parallel Streams Considerations

- Not all workloads benefit from parallelization

- Overhead can be significant for smaller data sets

- Be mindful of the cost of combining partial results

7. Project Loom: Virtual Threads

Java's Project Loom introduced virtual threads, which are lightweight threads managed by the JVM rather than by the OS. They were a preview feature in Java 19 and 20 and became a standard feature in Java 21. Virtual threads make blocking calls cheap in terms of resource usage, potentially simplifying concurrency.

// Example of virtual threads (standard since Java 21)

public class VirtualThreadDemo {

public static void main(String[] args) throws InterruptedException {

Thread[] threads = new Thread[100_000];

// Create a large number of virtual threads

for (int i = 0; i < threads.length; i++) {

threads[i] = Thread.startVirtualThread(() -> {

try {

// Simulate some I/O or blocking operation

Thread.sleep(100);

System.out.println("Virtual thread: " + Thread.currentThread());

} catch (InterruptedException e) {

Thread.currentThread().interrupt();

}

});

}

// Virtual threads are daemon threads, so wait for them before main returns

for (Thread thread : threads) {

thread.join();

}

}

}

Conclusion

Java's concurrency landscape continues to evolve:

- Futures & CompletableFuture for composing async tasks

- Parallel Streams for automatic parallel data processing

- Virtual threads (Project Loom, standard since Java 21) promising simplified massive concurrency